Power, trust, and the illusion of intelligent states

For centuries, power in society was visible. It had a face, a building, a process. Decisions were made by people, recorded on paper, and—at least in principle—could be questioned. You might disagree with a tax, a fine, or a ruling, but you could ask why. Someone, somewhere, could explain.

Today, governments are entering a new era of power: algorithmic power. Artificial intelligence is being introduced into systems that assess risk, distribute resources, detect fraud, prioritize cases, and increasingly influence decisions that shape citizens’ lives. The promise is seductive: faster services, fewer errors, smarter states.

But something fundamental is changing.

In many of these systems, decisions emerge from models no human fully understands. The outcome is visible. The reason is not. What replaces the old bureaucratic “because the law says so” is something far more opaque: “because the model says so.”

This is the new illusion of intelligent states. And it is dangerous.

Governments do not fail because they lack intelligence. They fail when they lose legitimacy. And legitimacy depends on one simple property: the ability to explain, audit, and justify power.

AI without traceability erodes that foundation.

The Rise of Invisible Authority

In the private sector, opacity is tolerated. If a recommendation engine misjudges your taste in movies, you shrug. If an ad-targeting system misunderstands you, you scroll past.

In the public sector, the stakes are different.

When an algorithm flags a citizen for inspection, denies a benefit, delays a permit, or assigns a risk score, it is exercising authority. Even if no human “decides” in the traditional sense, the outcome feels like a decision. And for the person affected, it is one.

Yet increasingly, no one can answer the simplest question:

Why did this happen?

Not in human terms. Not in legal terms. Not in a way that can be challenged.

The system becomes an invisible authority. It does not argue. It does not justify. It simply outputs.

In many modern public systems, artificial intelligence is introduced to handle vast volumes of declarations, transactions, and compliance records. The stated goal is nearly always the same: improve oversight, accelerate processes, and reduce human error.

In practice, however, a familiar pattern emerges. Data enters the system. Models transform it. On the other side appear alerts, scores, or classifications.

And somewhere along this path, the logic disappears. No one—not the citizen, not the civil servant, not even those who designed the system—can fully reconstruct how a specific outcome emerged from a specific input.

The system “knows.” Humans merely accept.

This is not intelligence. It is authority without legibility.

Why Black-Box AI Cannot Govern

In democratic systems, the reason matters more than the result.

A citizen may accept a penalty, a delay, or a denial if they understand the grounds on which it occurred. Even unfair decisions can be contested. That possibility is what keeps power legitimate.

Black-box AI breaks this contract.

Most modern AI systems—especially those based on deep learning—do not reason in ways that map cleanly to human concepts. They learn patterns across high-dimensional spaces. Their “decisions” are emergent properties of millions or billions of parameters.

This is not inherently bad. It is often what makes them powerful.

But when such systems are embedded into governance, three fractures appear:

- Accountability evaporates. Who is responsible for an outcome? The developer? The data scientist? The agency? The model? “The system” becomes a shield.

- Appeal becomes meaningless. You cannot argue with a probability distribution. You cannot cross-examine a vector.

- Trust collapses silently. Citizens may comply, but they no longer believe. The state becomes efficient and alien at the same time.

In practice, what happens is subtle. The system is introduced as “decision support.” Over time, human operators learn to trust it more than themselves. Eventually, the output becomes the decision.

And yet, no one can say why.

A government that cannot explain itself cannot govern in the long term. It can only administer.

Traceability as Democratic Infrastructure

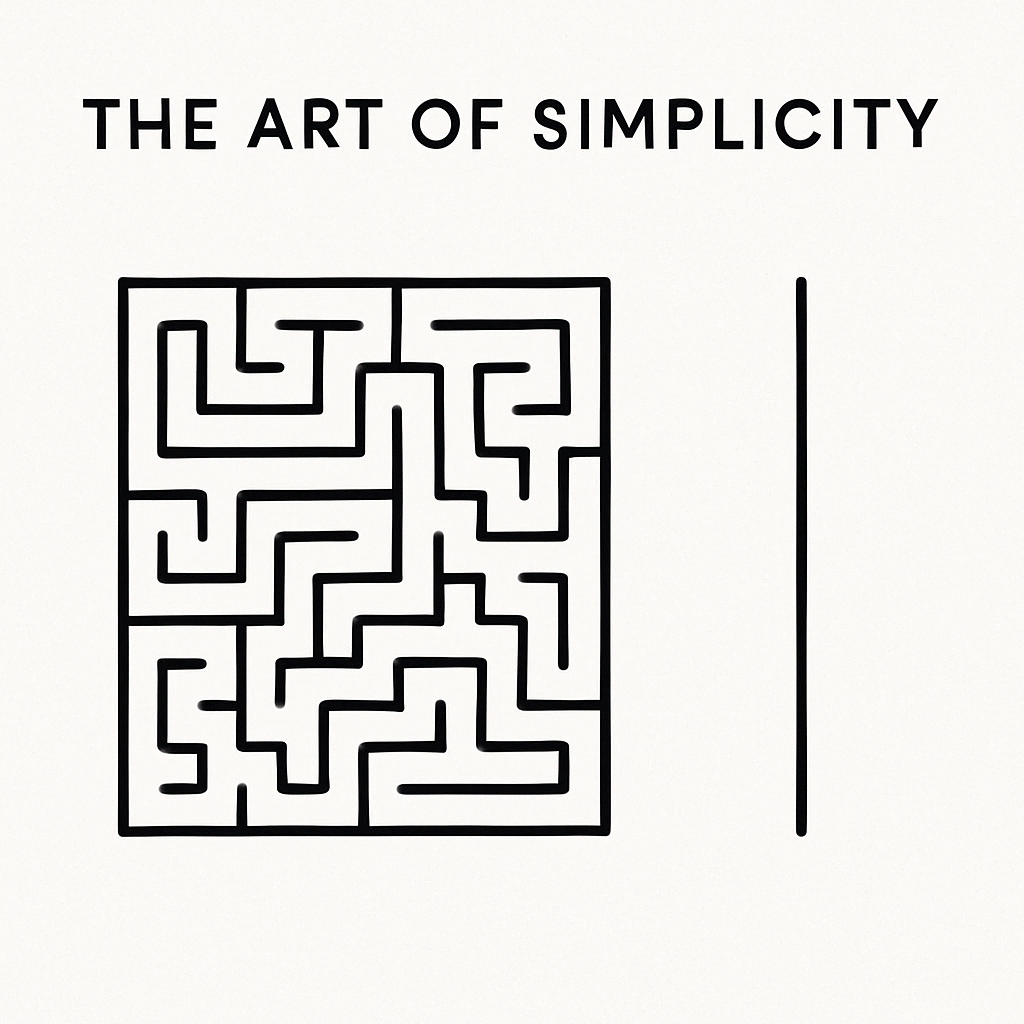

We often speak of AI in terms of intelligence. We should speak of it in terms of infrastructure.

Roads make movement possible.

Electricity makes industry possible.

Traceability makes legitimacy possible.

In any system that exercises public power, every outcome must be:

- reconstructable

- auditable

- explainable in human terms

Not merely “the model had high confidence,” but:

- What data was used?

- Which rules applied?

- What transformations occurred?

- Where did uncertainty exist?

- At which point could a human intervene?

Traceability is not a feature. It is an architectural principle.

It means designing systems where every automated step leaves a trail:

- immutable logs

- versioned models

- input snapshots

- rule evaluations

- decision paths

It means that an outcome is not just a number or a label, but a story—a chain of reasoning that can be inspected.
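To make the idea of a trail concrete, here is a minimal sketch, assuming a simple Python service. Each decision is stored as a record holding the model version, an input snapshot, the rule evaluations and the decision path, and each record carries the hash of the record before it, so a silent edit to an earlier entry breaks the chain. The names `DecisionRecord`, `AuditLog` and their fields are hypothetical, chosen for illustration; a production system would more likely rely on a write-once store or a transparency log than on an in-memory list.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the very first entry


def digest(obj) -> str:
    """SHA-256 over a canonical JSON encoding (sorted keys, fixed separators)."""
    payload = json.dumps(obj, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class DecisionRecord:
    case_id: str
    model_version: str    # which versioned model produced the score
    input_snapshot: dict  # the exact inputs the model and rules saw
    rule_results: dict    # rule name -> whether it applied
    decision_path: list   # ordered, human-readable steps to the outcome
    outcome: str
    prev_hash: str        # hash of the preceding record in the log

    def record_hash(self) -> str:
        return digest(asdict(self))


@dataclass
class AuditLog:
    """Append-only log in which every entry commits to the one before it."""
    entries: list = field(default_factory=list)

    def head(self) -> str:
        """Hash of the newest entry; publishing it elsewhere protects that entry too."""
        return self.entries[-1].record_hash() if self.entries else GENESIS

    def append(self, **details) -> DecisionRecord:
        record = DecisionRecord(prev_hash=self.head(), **details)
        self.entries.append(record)
        return record

    def verify(self, expected_head=None) -> bool:
        """Recompute the chain; editing or deleting an earlier entry breaks a link."""
        prev = GENESIS
        for record in self.entries:
            if record.prev_hash != prev:
                return False
            prev = record.record_hash()
        return expected_head is None or prev == expected_head
```

In use, each automated step calls `append(...)`, and an auditor years later can call `verify()` against a head hash that was published at the time, reading each record as the "story" of one outcome.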

In one compliance platform I helped design, the core requirement was not speed or scale. It was this:

Every result must be reproducible years later, even if the system itself has changed.

Why? Because the system was shaping legal reality. It was producing records that could be contested in court, audited by regulators, and challenged by citizens.

Without traceability, such a system would not be a tool of governance. It would be an oracle.

And oracles do not belong in democracies.
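The requirement that every result be reproducible years later, even after the system has changed, can be met in several ways. One is sketched here under stated assumptions: keep every deployed rule set permanently callable under an immutable version tag, store that tag and the exact input snapshot with each outcome, and later re-run the same version on the same snapshot. The registry, the version tags and the income thresholds below are invented for illustration; for a trained model the equivalent step is archiving the model artifact and its runtime environment, which is considerably harder than this toy suggests.

```python
from typing import Callable, Dict

Scorer = Callable[[dict], str]

# Hypothetical registry: every version ever deployed stays callable.
REGISTRY: Dict[str, Scorer] = {}


def register(version: str):
    """Decorator that files a rule set under a version tag that can never be reused."""
    def wrap(fn: Scorer) -> Scorer:
        if version in REGISTRY:
            raise ValueError(f"version {version!r} already registered; versions are immutable")
        REGISTRY[version] = fn
        return fn
    return wrap


@register("rules-2021.1")
def rules_2021(inputs: dict) -> str:
    # Illustrative policy: flag if declared income is under half of third-party reports.
    return "flag" if inputs["declared_income"] < 0.5 * inputs["reported_income"] else "clear"


@register("rules-2024.2")
def rules_2024(inputs: dict) -> str:
    # Illustrative later policy with a stricter threshold.
    return "flag" if inputs["declared_income"] < 0.8 * inputs["reported_income"] else "clear"


def decide(version: str, inputs: dict) -> dict:
    """Produce an outcome together with what is needed to replay it later."""
    return {"version": version, "inputs": dict(inputs), "outcome": REGISTRY[version](inputs)}


def replay(record: dict) -> bool:
    """Re-run the recorded version on the recorded snapshot and compare outcomes."""
    return REGISTRY[record["version"]](record["inputs"]) == record["outcome"]
```

The point of the toy is the contrast: the 2024 rules would flag a case that the 2021 rules cleared, yet replaying the old record still reproduces the old outcome, because the record names the version that actually produced it.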

From “Smart Government” to “Legible Government”

The dominant narrative is “smart government.”

Smarter cities.

Smarter taxation.

Smarter welfare.

Smarter policing.

But “smart” is the wrong goal.

What societies actually need is legible government.

A legible system is one that:

- can be read

- can be questioned

- can be understood by non-experts

- exposes its logic instead of hiding it

Legibility is what allows citizens to remain political actors instead of becoming data points.

AI can support this. But only if its role is reframed.

Instead of asking:

How can AI decide better than humans?

We should ask:

How can AI help humans see more clearly what is happening?

AI should illuminate complexity, not replace judgment.

It should surface patterns, not impose outcomes.

It should extend human reasoning, not obscure it.

In this model:

- AI proposes

- Humans decide

- Systems record

- Everyone can audit

This is slower. It is messier. It is less “impressive” in demos.

It is also sustainable.
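The division of labor in which AI proposes, humans decide, systems record and everyone can audit can be sketched in a few types, assuming a case-handling service written in Python. The model's output is typed as a `Proposal`, no outcome can be finalized without a `HumanDecision` that names a reviewer and gives a written justification, and every finalized case is kept together with whether the human departed from the model. All class and field names are hypothetical; the override rate is one illustrative signal for the drift toward rubber-stamping described earlier, not an established metric.

```python
from dataclasses import dataclass, field
from typing import List, Optional


class MissingHumanDecision(Exception):
    """Raised when an outcome would be finalized without an accountable human."""


@dataclass(frozen=True)
class Proposal:
    """AI proposes: a suggested outcome plus the factors behind it."""
    case_id: str
    suggested_outcome: str
    score: float
    reasons: tuple


@dataclass(frozen=True)
class HumanDecision:
    """Humans decide: a named reviewer, an outcome and a written justification."""
    reviewer: str
    outcome: str
    justification: str


@dataclass
class CaseFile:
    """Systems record, everyone can audit: finalized cases are kept with their proposal."""
    records: List[dict] = field(default_factory=list)

    def finalize(self, proposal: Proposal, decision: Optional[HumanDecision]) -> dict:
        if decision is None or not decision.reviewer.strip() or not decision.justification.strip():
            raise MissingHumanDecision(f"case {proposal.case_id}: no named reviewer and justification")
        entry = {
            "case_id": proposal.case_id,
            "proposal": proposal,
            "decision": decision,
            "overridden": decision.outcome != proposal.suggested_outcome,
        }
        self.records.append(entry)
        return entry

    def audit(self, case_id: str) -> List[dict]:
        """Everything recorded about one case, proposal and human decision side by side."""
        return [r for r in self.records if r["case_id"] == case_id]

    def override_rate(self) -> float:
        """Share of finalized cases where the human departed from the model's suggestion."""
        if not self.records:
            return 0.0
        return sum(r["overridden"] for r in self.records) / len(self.records)
```

The refusal in `finalize` is the design choice that matters: the model can be as accurate as it likes, but its output never becomes an outcome on its own.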

Intelligence Without Legitimacy Fails

History is full of efficient systems that collapsed because they lost trust.

Empires with perfect logistics.

Bureaucracies with flawless procedures.

Institutions that “worked” while people stopped believing in them.

AI will not save governments from this fate. It will accelerate it if misused.

A state that cannot explain its actions will eventually face a population that no longer accepts them. The conflict may not be loud at first. It begins as disengagement, cynicism, and quiet resistance.

“Nothing makes sense anymore.”

“They decide somewhere else.”

“It’s just the system.”

These are not technical failures. They are political fractures.

AI without traceability creates power without voice.

Efficiency without legitimacy.

Intelligence without democracy.

Governments do not need machines that think for them.

They need systems that allow everyone to see how power flows.

Because in the end, the question is not whether AI is smart.

It is whether the society using it remains intelligible to itself.