Control as a Modern Comfort

Modern digital systems promise something deeply attractive: control.

Dashboards glow with metrics. Automations hum silently in the background. Alerts reassure us that “everything is under monitoring.” Buttons exist for every action. Logs exist for every event. Reports exist for every decision.

And yet — when something goes wrong — the truth is often brutal:

no one was really in control.

Outages cascade across systems that were supposedly “redundant.”

AI models behave in unexpected ways.

Data pipelines produce results no one fully understands.

Critical decisions are “explained” after the fact, not before.

This is not a failure of technology.

It is a failure of how we understand control in digital systems.

The Control Myth: Visibility ≠ Authority

One of the most persistent misconceptions in digital transformation is the idea that visibility equals control.

We assume that because:

- we can see metrics,

- we can query logs,

- we can inspect dashboards,

we therefore govern the system.

In reality, visibility often arrives after complexity has already escaped human comprehension.

A system can be:

- fully observable,

- richly instrumented,

- exhaustively logged,

and still be operationally uncontrollable.

Why?

Because control requires predictability, not just observation.

Complexity Is Not the Enemy — Unacknowledged Complexity Is

Digital systems today are not complicated — they are complex.

There is a critical difference:

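- Complicated systems can be understood by decomposition: study the parts and you understand the whole.

- Complex systems exhibit emergent behavior: the whole does things that no individual part explains.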

Cloud-native architectures, AI pipelines, distributed event-driven systems, and automation networks all belong to the second category.

They behave more like ecosystems than machines.

Yet we continue to manage them as if:

- every cause has a single effect,

- every failure has a single root,

- every system has a single owner.

This mismatch creates the illusion of control.

Automation: The Great Amplifier of False Confidence

Automation is often sold as a way to reduce human error.

In practice, it tends to move error upstream, hide it, and amplify its consequences.

When humans operate manually:

- mistakes are visible,

- inconsistencies are obvious,

- friction slows damage.

When automation operates:

- mistakes scale instantly,

- inconsistencies propagate silently,

- damage accumulates before detection.
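One way to put some of the lost friction back into an automated loop is a failure budget: the automation stops, and says so, once a small number of actions have failed, instead of pushing a bad change to every target. The Python sketch below is illustrative only. `run_batch`, the host names, and the default budget of two failures are hypothetical choices for the example, not a standard tool or API.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable


@dataclass
class BatchResult:
    applied: list[str] = field(default_factory=list)
    failed: list[str] = field(default_factory=list)
    halted: bool = False


def run_batch(
    targets: Iterable[str],
    action: Callable[[str], bool],
    max_failures: int = 2,
) -> BatchResult:
    """Apply `action` to each target, halting once failures exceed the budget.

    `action` returns True on success and False on failure. Without the
    budget, a broken action would run against every target; with it, at most
    `max_failures + 1` targets fail before the loop stops and says so.
    """
    result = BatchResult()
    for target in targets:
        if action(target):
            result.applied.append(target)
        else:
            result.failed.append(target)
            if len(result.failed) > max_failures:
                result.halted = True  # stop loudly instead of continuing silently
                break
    return result


# Usage: a broken action aimed at 100 hypothetical hosts stops after 3 failures.
report = run_batch([f"host-{i}" for i in range(100)], lambda host: False)
print(len(report.failed), report.halted)  # 3 True
```

The budget does not make the automation correct. It only caps how far a mistake can travel before a human has to look, which is the friction that manual operation used to provide for free.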

The illusion emerges when organizations believe that:

“Because the system runs automatically, it is under control.”

In reality, automation reduces intervention, not responsibility.

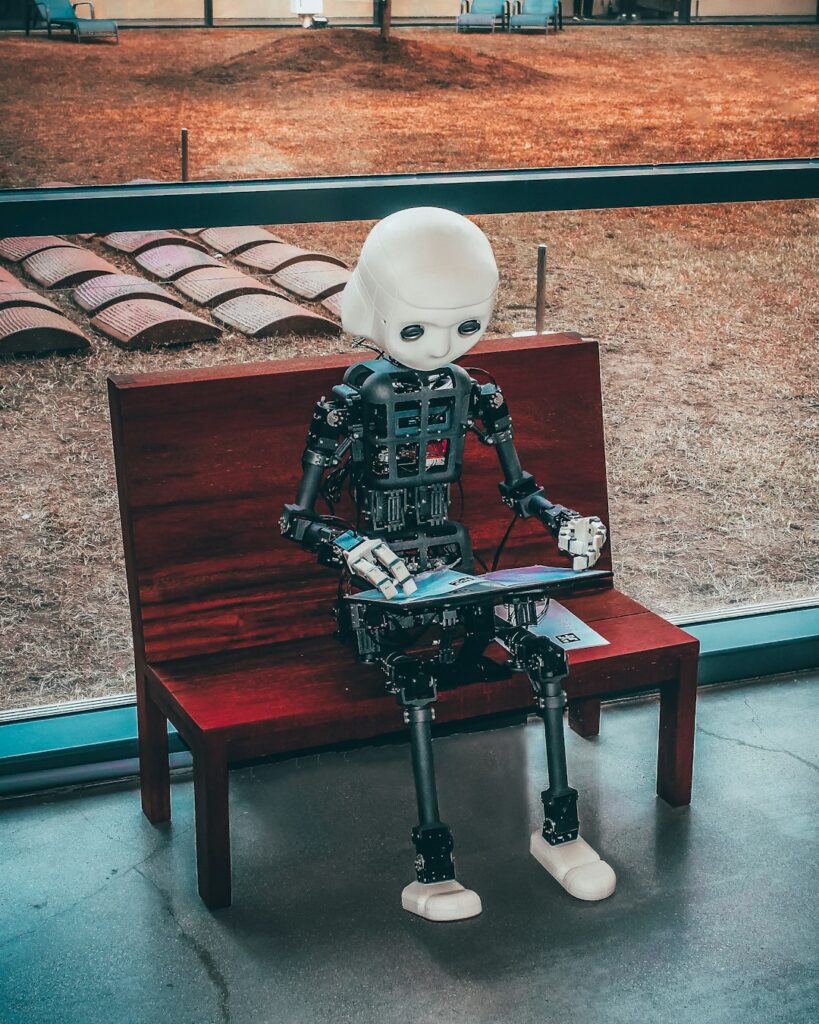

AI Systems and the Displacement of Agency

AI introduces a deeper illusion: decision control without decision ownership.

Models produce outputs that:

- look authoritative,

- sound confident,

- arrive instantly.

But:

- who understands the full decision boundary?

- who validates training assumptions continuously?

- who is accountable when outcomes drift?

Many organizations treat AI as:

“An intelligent black box we supervise.”

In practice, AI often becomes:

“A silent decision-maker we justify retroactively.”

Control without understanding is not control — it is delegation without governance.

Dashboards: The New Control Theater

Dashboards are the modern control room — but many are closer to theater than command.

Common symptoms:

- KPIs chosen for reporting convenience, not system health

- Metrics optimized for presentation, not truth

- Green indicators masking structural fragility

Dashboards tend to answer:

“Is the system currently within expected ranges?”

They rarely answer:

“Can this system fail catastrophically tomorrow?”
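The gap between these two questions can be made concrete by computing both side by side. In the Python sketch below, which is a hypothetical illustration, the `Replica` record, the zone names and the criterion "still meets the minimum after losing any one zone" are assumptions standing in for whatever structural risk a real system carries.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Replica:
    name: str
    zone: str
    healthy: bool


def dashboard_green(replicas: list[Replica], min_healthy: int) -> bool:
    """The question dashboards usually answer: are we within range right now?"""
    return sum(r.healthy for r in replicas) >= min_healthy


def survives_zone_loss(replicas: list[Replica], min_healthy: int) -> bool:
    """The question they rarely answer: would we still meet the minimum
    if any single zone disappeared tomorrow?"""
    if not dashboard_green(replicas, min_healthy):
        return False
    for lost_zone in {r.zone for r in replicas}:
        remaining = sum(r.healthy for r in replicas if r.zone != lost_zone)
        if remaining < min_healthy:
            return False
    return True


# Three healthy replicas, all in the same hypothetical zone.
replicas = [
    Replica("api-1", "zone-a", True),
    Replica("api-2", "zone-a", True),
    Replica("api-3", "zone-a", True),
]
print(dashboard_green(replicas, min_healthy=2))     # True
print(survives_zone_loss(replicas, min_healthy=2))  # False
```

Every indicator is green, yet one zone outage takes the whole service down. Nothing on a "current state" dashboard would reveal that.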

Control theater feels safe — until reality interrupts the performance.

When Systems Obey Rules but Betray Intent

One of the most dangerous illusions is believing that rule-based systems guarantee correct outcomes.

Digital systems do exactly what they are designed to do — not what we intended them to do.

If:

- business logic is fragmented,

- rules are inconsistent,

- exceptions are patched instead of modeled,

the system remains obedient — and wrong.

This is why many failures are technically “correct” and operationally disastrous.

Compliance: Control’s Most Misunderstood Substitute

Regulatory compliance is often mistaken for operational control.

Compliance answers:

- “Did we follow the rules?”

Control answers:

- “Can we prevent harm?”

A system can be:

- fully compliant,

- perfectly documented,

- legally defensible,

and still be operationally unsafe.

True control is proactive.

Compliance is retrospective.

Confusing the two creates institutional blind spots.

Why Humans Feel Less in Control Than Ever

Ironically, as systems become more sophisticated, human confidence decreases.

Not because people are less capable — but because:

- decision chains are opaque,

- causality is delayed,

- responsibility is diffused.

People are asked to:

- trust outputs they cannot explain,

- approve actions they cannot simulate,

- own results they cannot influence.

This is not empowerment.

It is managed helplessness.

Control Is a Design Property, Not a Feature

Real control does not emerge from:

- more dashboards,

- more automation,

- more AI.

It emerges from intentional system design.

Systems that support real control share common traits:

- explicit domain models

- clear ownership boundaries

- reversible actions

- traceable decision paths

- built-in friction where harm is possible
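These traits can sound abstract, so here is one possible shape for three of them (reversible actions, traceable decision paths and built-in friction) in a small Python sketch. It is a hypothetical illustration rather than a prescribed design: `ControlledConfig`, the integer settings and the rule that any change larger than `risk_threshold` needs a named approver are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Decision:
    """A traceable record of one change: what, who, why, and what it replaced."""
    key: str
    old: Optional[int]
    new: int
    actor: str
    reason: str
    approver: Optional[str] = None


class ApprovalRequired(Exception):
    """Raised when a change crosses the harm threshold without sign-off."""


@dataclass
class ControlledConfig:
    # Hypothetical rule: any change larger than this needs a second person.
    risk_threshold: int = 10
    values: dict[str, int] = field(default_factory=dict)
    history: list[Decision] = field(default_factory=list)

    def change(self, key: str, new: int, actor: str, reason: str,
               approver: Optional[str] = None) -> None:
        old = self.values.get(key)
        delta = abs(new - (old if old is not None else 0))
        # Built-in friction: a risky change cannot proceed on one person's say-so.
        if delta > self.risk_threshold and approver is None:
            raise ApprovalRequired(f"{key}: change of {delta} needs an approver")
        # Traceable decision path: record the change before applying it.
        self.history.append(Decision(key, old, new, actor, reason, approver))
        self.values[key] = new

    def undo_last(self) -> Decision:
        """Reversible action: restore the value the most recent change replaced.

        Raises IndexError if there is nothing to undo.
        """
        last = self.history.pop()
        if last.old is None:
            del self.values[last.key]
        else:
            self.values[last.key] = last.old
        return last


cfg = ControlledConfig()
cfg.change("max_workers", 8, actor="alice", reason="baseline")
try:
    cfg.change("max_workers", 40, actor="bob", reason="traffic spike")
except ApprovalRequired as exc:
    print(exc)  # max_workers: change of 32 needs an approver
cfg.change("max_workers", 40, actor="bob", reason="traffic spike", approver="carol")
cfg.undo_last()
print(cfg.values["max_workers"])  # 8
```

Note that the friction applies only where the change is large. Routine adjustments stay fast, while every change, routine or not, remains explainable and undoable.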

Control is not about speed — it is about recoverability.

The Paradox: Fewer Control Surfaces, More Actual Control

Counterintuitively, systems that provide:

- fewer buttons,

- fewer overrides,

- fewer “emergency fixes”

often offer more real control.

Why?

Because:

- constraints are explicit,

- behavior is predictable,

- failure modes are understood.

Control thrives in systems that accept limits.

Reframing Control for the Digital Age

The future of digital systems does not belong to those who promise:

“Total control.”

It belongs to those who design for:

- bounded autonomy

- human oversight with teeth

- systems that fail visibly, not silently

- responsibility that cannot be abstracted away

The illusion of control is comforting.

But comfort is not safety.

Closing Thought: Control Is Not Power — It Is Accountability

In digital systems, control is not about dominance.

It is about owning consequences.

If no one can:

- explain why the system behaves as it does,

- intervene meaningfully when it drifts,

- accept responsibility when it harms,

then control never existed — only the illusion of it.

And illusions, in complex systems, are the most dangerous failure mode of all.